1. Overview

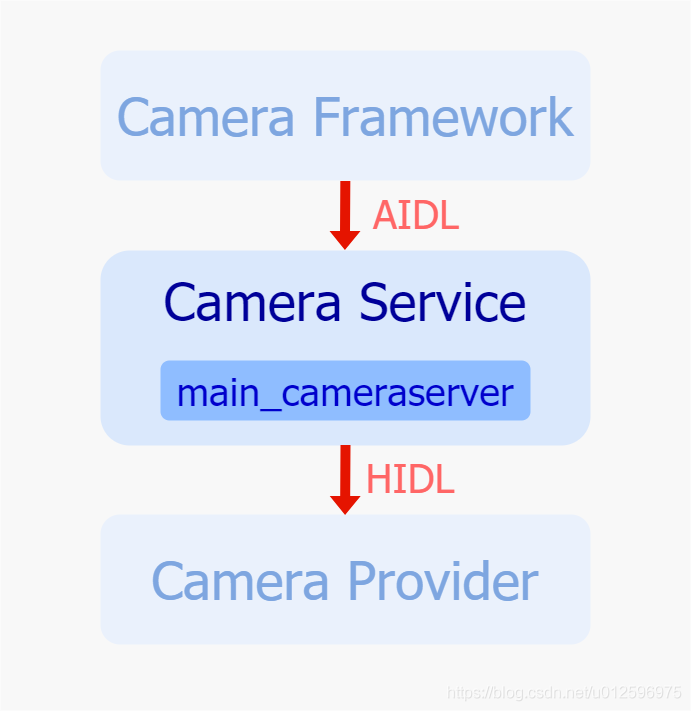

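Camera Service is designed as a standalone process. It acts as a server that handles cross-process requests from the Camera Framework client, performs some processing internally, and then acts as a client itself, forwarding the requests to Camera Provider, which is the server on that side. The whole flow therefore involves two cross-process hops: the first is implemented with AIDL and the second with HIDL. Because Camera Service is only a client when it talks to Camera Provider, this article focuses on the AIDL interfaces and on the implementation of the Camera Service main program.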

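2. Camera AIDL Interfaces

Before looking at the Camera AIDL interfaces, it is worth briefly reviewing what AIDL is and why Google built it.

In Android, two processes normally cannot access each other's memory. To get around this, Android offers Messenger and broadcasts, as well as Binder. When one process needs concurrent, multi-threaded access to another, Messenger and broadcasts tend to work poorly, so Binder is the main approach. Binder's raw interface is fairly complex to use, however, and is not friendly to developers, beginners in particular. To lower the barrier to cross-process development, Google introduced AIDL (Android Interface Definition Language), which wraps the Binder implementation details behind a simpler interface and makes cross-process development considerably more efficient.

AIDL requires the server side to create a set of *.aidl files, define in them the public interfaces offered to clients, and implement those interfaces. Let's look at the main aidl files.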
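To make the idea of AIDL hiding the Binder plumbing more concrete, here is a minimal, self-contained C++ sketch of the proxy/stub pattern that this kind of generated code follows. It does not use the real Binder or AIDL APIs: `ParcelSketch`, `ServiceStubSketch`, `ServiceProxySketch`, the transaction code and the camera count of 2 are all hypothetical names and values chosen for illustration, and the "transport" is an ordinary function call rather than a real cross-process hop.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical stand-in for a Binder parcel: a flat list of 32-bit words.
using ParcelSketch = std::vector<int32_t>;

// The contract both sides agree on (roughly what an .aidl file declares).
struct ICameraServiceSketch {
    virtual ~ICameraServiceSketch() = default;
    virtual int32_t getNumberOfCameras(int32_t type) = 0;
};

constexpr int32_t kGetNumberOfCameras = 1;  // illustrative transaction code

// Server side: the actual implementation of the interface.
struct CameraServiceImplSketch : ICameraServiceSketch {
    int32_t getNumberOfCameras(int32_t /*type*/) override { return 2; }
};

// Server-side stub: unpacks a transaction and calls the implementation.
struct ServiceStubSketch {
    ICameraServiceSketch& impl;
    ParcelSketch onTransact(int32_t code, const ParcelSketch& data) {
        if (code == kGetNumberOfCameras && !data.empty()) {
            return ParcelSketch{impl.getNumberOfCameras(data[0])};
        }
        return ParcelSketch{-1};  // unknown transaction
    }
};

using TransportSketch = std::function<ParcelSketch(int32_t, const ParcelSketch&)>;

// Client-side proxy: looks like the interface, but only packs arguments
// and sends them through the transport.
struct ServiceProxySketch : ICameraServiceSketch {
    explicit ServiceProxySketch(TransportSketch transport)
        : mTransport(std::move(transport)) {}
    int32_t getNumberOfCameras(int32_t type) override {
        ParcelSketch request{type};
        ParcelSketch reply = mTransport(kGetNumberOfCameras, request);
        return reply.empty() ? -1 : reply[0];
    }
    TransportSketch mTransport;
};

// The caller only ever sees ICameraServiceSketch.
int32_t queryCameraCountSketch() {
    CameraServiceImplSketch impl;
    ServiceStubSketch stub{impl};
    ServiceProxySketch proxy([&stub](int32_t code, const ParcelSketch& data) {
        return stub.onTransact(code, data);
    });
    return proxy.getNumberOfCameras(0);
}
```

The point of the pattern is that the caller programs against the interface only; packing, transport and unpacking stay inside the proxy and the stub.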

2.1 ICameraService.aidl

ICameraService.aidl defines the ICameraService interface, which is implemented by the CameraService class. Its main methods are:

- getNumberOfCameras(): returns the number of cameras the system supports

- connectDevice(): opens a camera device

- addListener(): registers a listener for camera device and flashlight status

[->frameworks\av\camera\aidl\android\hardware\ICameraService.aidl]

    interface ICameraService

2.2 ICameraDeviceCallbacks.aidl

ICameraDeviceCallbacks.aidl defines the ICameraDeviceCallbacks interface, which is implemented by the CameraDeviceCallbacks class in the Framework. Its main methods are:

- onResultReceived(): called as soon as the Service receives result data, to send it to the Framework

- onCaptureStarted(): called as soon as image capture starts, to pass some information and the timestamp up to the Framework

- onDeviceError(): called to notify the Framework when an error occurs

[->frameworks\av\camera\aidl\android\hardware\camera2\ICameraDeviceCallbacks.aidl]

    interface ICameraDeviceCallbacks

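2.3 ICameraDeviceUser.aidl

ICameraDeviceUser.aidl defines the ICameraDeviceUser interface, which is ultimately implemented by CameraDeviceClient. Its main methods are:

- disconnect(): closes the camera device

- submitRequestList(): submits requests

- beginConfigure(): starts configuring the camera device; must be called before any stream operation

- endConfigure(): finishes configuring the camera device; must be called before any request is submitted

- createDefaultRequest(): creates a request with a default configuration

[->frameworks\av\camera\aidl\android\hardware\camera2\ICameraDeviceUser.aidl]

    interface ICameraDeviceUser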

2.4 ICameraServiceListener.aidl

ICameraServiceListener.aidl defines the ICameraServiceListener interface, which is implemented by the CameraManagerGlobal class in the Framework. Its main methods are:

- onStatusChanged(): reports a change in the status of a camera device

- onCameraOpened(): reports that a camera has been opened

- onCameraClosed(): reports that a camera has been closed

[->frameworks\av\camera\aidl\android\hardware\ICameraServiceListener.aidl]

    interface ICameraServiceListener

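3. Camera Service

The Camera Service main program starts when the system boots. Its main purpose is to expose AIDL interfaces for the Framework to call, and to establish communication with Camera Provider by calling its HIDL interfaces. It also maintains the resources obtained from the Framework and the Provider, and organizes them so that the whole service runs stably and efficiently. The following sections walk through the initialization process and then the handling of requests from applications.

3.1 Startup and Initialization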

3.1.1 cameraserver.rc

Startup is driven by the cameraserver.rc configuration.

[->frameworks\av\camera\cameraserver\cameraserver.rc]

    service cameraserver /system/bin/cameraserver

3.1.2 main_cameraserver.cpp

The main function in main_cameraserver.cpp is executed.

[->frameworks\av\camera\cameraserver\main_cameraserver.cpp]

    int main(int argc __unused, char** argv __unused)

3.1.3 CameraService::onFirstRef

After instantiate runs, CameraService's onFirstRef method is invoked.

[->frameworks\av\services\camera\libcameraservice\CameraService.cpp]

    void CameraService::onFirstRef()

3.1.4 CameraService::enumerateProviders

A CameraProviderManager object is instantiated and initialized.

    status_t CameraService::enumerateProviders() {

3.1.5 mCameraProviderManager::initialize

[->frameworks/av/services/camera/libcameraservice/common/CameraProviderManager.cpp]

CameraProviderManager initialization:

    status_t CameraProviderManager::initialize(wp<CameraProviderManager::StatusListener> listener,

3.1.6 addProviderLocked

[->frameworks/av/services/camera/libcameraservice/common/CameraProviderManager.cpp]

    status_t CameraProviderManager::addProviderLocked(const std::string& newProvider) {

3.1.7 ProviderInfo::initialize

[->frameworks/av/services/camera/libcameraservice/common/CameraProviderManager.cpp]

    status_t CameraProviderManager::ProviderInfo::initialize(

3.1.8 addDevice

Adds a device.

    status_t CameraProviderManager::ProviderInfo::addDevice(const std::string& name,

3.1.8.1 initializeDeviceInfo

    template<class DeviceInfoT>

3.1.8.2 startDeviceInterface

    template<>

3.1.8.3 new DeviceInfo3

    CameraProviderManager::ProviderInfo::DeviceInfo3::DeviceInfo3(const std::string& name,

3.1.9 Summary

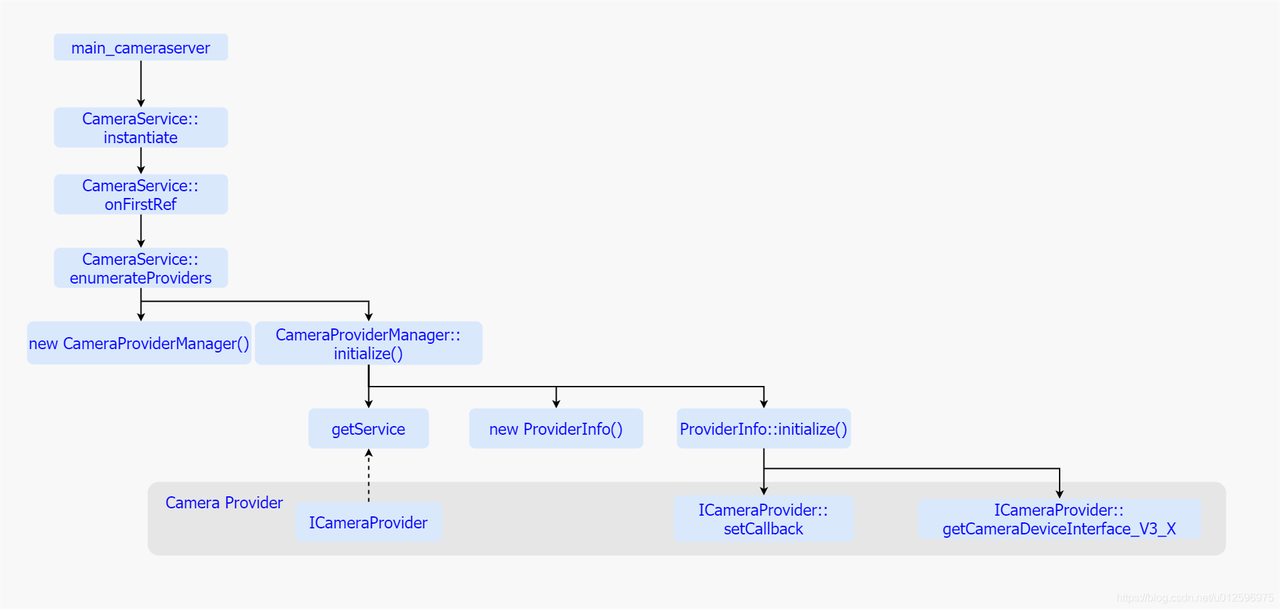

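When the system boots, the main_cameraserver program runs first and calls CameraService's instantiate method, which eventually leads to CameraService's onFirstRef method. That is where the initialization of CameraService begins.

Inside onFirstRef, enumerateProviders is called. It does two main things:

- It instantiates a CameraProviderManager object, which manages the resources related to Camera Provider.

- It calls CameraProviderManager's initialize method to initialize that object.

During CameraProviderManager initialization, three main things happen:

- First, the ICameraProvider proxy is obtained through the getService method.

- Next, a ProviderInfo object is instantiated and its initialize method is called.

- Finally, the ProviderInfo is added to an internal container for management.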

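The ProviderInfo initialize method performs the following steps:

- First, it receives the ICameraProvider proxy obtained by CameraProviderManager and stores it in a member variable.

- Second, because ProviderInfo implements the ICameraProviderCallback interface, it calls ICameraProvider's setCallback to register itself with Camera Provider and receive event callbacks from it.

- Then it calls getCameraDeviceInterface_V3_X on the ICameraProvider proxy to obtain the Provider-side ICameraDevice proxy and passes that proxy to the DeviceInfo3 constructor. While DeviceInfo3 is being constructed, the device's characteristics are fetched through the ICameraDevice proxy's getCameraCharacteristics method and saved in a member variable.

- Finally, ProviderInfo stores every DeviceInfo3 in an internal container for unified management. At this point initialization is complete.

After these steps the Camera Service process is up and running. It has obtained the Camera Provider proxy and registered its own Camera Provider callback with the Provider, which establishes communication with the Provider. On the other side, it exposes its AIDL interfaces to the Framework as a service and waits for requests from the Framework.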

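3.2 Handling Application Requests

Once the user opens a camera app, the app calls CameraManager's openCamera method, which enters the Framework layer. After some internal processing, the Framework sends the request down to Camera Service, which mainly retrieves the camera device's characteristics and opens the camera device. Using the camera device that is returned, the app then creates a session and submits requests. The following sections outline how Camera Service handles this series of requests.

3.2.1 Getting Characteristics

Retrieving camera device characteristics is simple. The characteristics of each camera device were already fetched during Camera Service startup and stored in DeviceInfo3, so this method just looks up the matching DeviceInfo3 and returns its characteristics.

    status_t CameraProviderManager::getCameraCharacteristics(const std::string &id,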
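The "fetch once at startup, answer from the cache later" idea can be sketched as follows. This is a simplified stand-in rather than the AOSP code: `ProviderManagerSketch`, `CharacteristicsSketch` and the tag name used in the usage example are hypothetical, and a plain `bool` replaces the real `status_t` return value.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <utility>

// Stand-in for CameraMetadata: tag name -> value (purely illustrative).
using CharacteristicsSketch = std::map<std::string, int>;

class ProviderManagerSketch {
public:
    // Startup path: called once per enumerated device, mirroring how each
    // DeviceInfo3 keeps the characteristics it fetched from the provider.
    void addDevice(const std::string& id, CharacteristicsSketch characteristics) {
        mDevices[id] = std::move(characteristics);
    }

    // Request path: a pure cache lookup with no call into the provider.
    // Returns false for an unknown id or a null output pointer.
    bool getCameraCharacteristics(const std::string& id,
                                  CharacteristicsSketch* out) const {
        auto it = mDevices.find(id);
        if (it == mDevices.end() || out == nullptr) return false;
        *out = it->second;
        return true;
    }

private:
    std::map<std::string, CharacteristicsSketch> mDevices;
};
```

A caller would register devices during startup with `addDevice("0", {{"partialResultCount", 2}})` and later read them back through `getCameraCharacteristics("0", &out)`, which never has to cross a process boundary.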

3.2.2 Opening the Camera

Opening a camera device is implemented mainly by connectDevice (for the detailed flow, see the earlier article 深入理解Camera架构一). The internals are fairly complex, so let's go through them in detail.

3.2.2.1 CameraService::connectDevice

    Status CameraService::connectDevice(

3.2.2.2 CameraService::connectHelper

    Status CameraService::connectHelper(const sp<CALLBACK>& cameraCb, const String8& cameraId,

3.2.2.3 CameraService::makeClient

    Status CameraService::makeClient(const sp<CameraService>& cameraService,

[->frameworks\av\services\camera\libcameraservice\api2\CameraDeviceClient.cpp]

    // Interface used by CameraService

new Camera3Device

[->frameworks\av\services\camera\libcameraservice\Camera3Device.cpp]

    Camera3Device::Camera3Device(const String8 &id, const String16& clientPackageName):
            sizePerFace(3),
            faceNumPerGroup(10),
            sizePerFaceGroup(sizePerFace * faceNumPerGroup),
            instaTagBase(0),
            instaTagSection("org.qti.camera.intro"),
            instaFirstTagName("instaInputMetadata"),
            inputMetadata(9),
            mId(id),
            mOperatingMode(NO_MODE),
            mIsConstrainedHighSpeedConfiguration(false),
            mStatus(STATUS_UNINITIALIZED),
            mStatusWaiters(0),
            mUsePartialResult(false),
            mNumPartialResults(1),
            mTimestampOffset(0),
            mNextResultFrameNumber(0),
            mNextReprocessResultFrameNumber(0),
            mNextZslStillResultFrameNumber(0),
            mNextShutterFrameNumber(0),
            mNextReprocessShutterFrameNumber(0),
            mNextZslStillShutterFrameNumber(0),
            mListener(NULL),
            mVendorTagId(CAMERA_METADATA_INVALID_VENDOR_ID),
            mLastTemplateId(-1),
            mNeedFixupMonochromeTags(false)
    {
        ATRACE_CALL();
        packge_name = clientPackageName;
        if (!strcmp(String8(packge_name).string(), "com.alibaba.dingtalk.focus")) {
            property_set("vendor.select.mulicamera", "1");
        }
        if (!strcmp(String8(packge_name).string(), "com.tencent.wemeet.rooms")) {
            property_set("vendor.select.mulicamera", "1");
        }
        if (!strcmp(String8(packge_name).string(), "com.ss.meetx.room")) {
            property_set("vendor.select.mulicamera", "1");
        }
        isLogicCam = mId == "3";
        char value[PROPERTY_VALUE_MAX];
        property_get("vendor.select.mulicamera", value, "0");
        bool enableMultiCam = atoi(value) == 1;
        if (enableMultiCam && !isLogicCam) {
            isLogicCam = (mId == "0") || (mId == "1");
        }
        ALOGD("%s: %s created device for camera %s", __FUNCTION__, String8(clientPackageName).c_str(), mId.string());
    }

3.2.2.4 CameraDeviceClient::initialize

    status_t CameraDeviceClient::initialize(sp<CameraProviderManager> manager,

- Camera2ClientBase::initialize

    template <typename TClientBase>

new FrameProcessorBase

[->frameworks\av\services\camera\libcameraservice\common\FrameProcessorBase.cpp]

    FrameProcessorBase::FrameProcessorBase(wp<CameraDeviceBase> device) :
        Thread(/*canCallJava*/false),
        mDevice(device),
        mNumPartialResults(1) {
        sp<CameraDeviceBase> cameraDevice = device.promote();
        if (cameraDevice != 0) {
            CameraMetadata staticInfo = cameraDevice->info();
            camera_metadata_entry_t entry = staticInfo.find(ANDROID_REQUEST_PARTIAL_RESULT_COUNT);
            if (entry.count > 0) {
                mNumPartialResults = entry.data.i32[0];
            }
        }
    }

Camera3Device::initialize

    status_t Camera3Device::initialize(sp<CameraProviderManager> manager, const String8& monitorTags) {
        ATRACE_CALL();
        Mutex::Autolock il(mInterfaceLock);
        Mutex::Autolock l(mLock);

        ALOGD("%s: Initializing HIDL device for camera %s", __FUNCTION__, mId.string());
        if (mStatus != STATUS_UNINITIALIZED) {
            CLOGE("Already initialized!");
            return INVALID_OPERATION;
        }
        if (manager == nullptr) return INVALID_OPERATION;

        sp<ICameraDeviceSession> session;
        ATRACE_BEGIN("CameraHal::openSession");
        // Obtain the ICameraDeviceSession proxy
        status_t res = manager->openSession(mId.string(), this,
                /*out*/ &session);
        ATRACE_END();
        if (res != OK) {
            SET_ERR_L("Could not open camera session: %s (%d)", strerror(-res), res);
            return res;
        }

        res = manager->getCameraCharacteristics(mId.string(), &mDeviceInfo);
        if (res != OK) {
            SET_ERR_L("Could not retrieve camera characteristics: %s (%d)", strerror(-res), res);
            session->close();
            return res;
        }

        std::vector<std::string> physicalCameraIds;
        bool isLogical = manager->isLogicalCamera(mId.string(), &physicalCameraIds);
        if (isLogical) {
            for (auto& physicalId : physicalCameraIds) {
                res = manager->getCameraCharacteristics(
                        physicalId, &mPhysicalDeviceInfoMap[physicalId]);
                if (res != OK) {
                    SET_ERR_L("Could not retrieve camera %s characteristics: %s (%d)",
                            physicalId.c_str(), strerror(-res), res);
                    session->close();
                    return res;
                }

                if (DistortionMapper::isDistortionSupported(mPhysicalDeviceInfoMap[physicalId])) {
                    mDistortionMappers[physicalId].setupStaticInfo(mPhysicalDeviceInfoMap[physicalId]);
                    if (res != OK) {
                        SET_ERR_L("Unable to read camera %s's calibration fields for distortion "
                                "correction", physicalId.c_str());
                        session->close();
                        return res;
                    }
                }
            }
        }

        std::shared_ptr<RequestMetadataQueue> queue;
        auto requestQueueRet = session->getCaptureRequestMetadataQueue(
            [&queue](const auto& descriptor) {
                queue = std::make_shared<RequestMetadataQueue>(descriptor);
                if (!queue->isValid() || queue->availableToWrite() <= 0) {
                    ALOGE("HAL returns empty request metadata fmq, not use it");
                    queue = nullptr;
                    // don't use the queue onwards.
                }
            });
        if (!requestQueueRet.isOk()) {
            ALOGE("Transaction error when getting request metadata fmq: %s, not use it",
                    requestQueueRet.description().c_str());
            return DEAD_OBJECT;
        }

        std::unique_ptr<ResultMetadataQueue>& resQueue = mResultMetadataQueue;
        auto resultQueueRet = session->getCaptureResultMetadataQueue(
            [&resQueue](const auto& descriptor) {
                resQueue = std::make_unique<ResultMetadataQueue>(descriptor);
                if (!resQueue->isValid() || resQueue->availableToWrite() <= 0) {
                    ALOGE("HAL returns empty result metadata fmq, not use it");
                    resQueue = nullptr;
                    // Don't use the resQueue onwards.
                }
            });
        if (!resultQueueRet.isOk()) {
            ALOGE("Transaction error when getting result metadata queue from camera session: %s",
                    resultQueueRet.description().c_str());
            return DEAD_OBJECT;
        }
        IF_ALOGV() {
            session->interfaceChain([](
                ::android::hardware::hidl_vec<::android::hardware::hidl_string> interfaceChain) {
                    ALOGV("Session interface chain:");
                    for (const auto& iface : interfaceChain) {
                        ALOGV(" %s", iface.c_str());
                    }
                });
        }

        camera_metadata_entry bufMgrMode =
                mDeviceInfo.find(ANDROID_INFO_SUPPORTED_BUFFER_MANAGEMENT_VERSION);
        if (bufMgrMode.count > 0) {
            mUseHalBufManager = (bufMgrMode.data.u8[0] ==
                    ANDROID_INFO_SUPPORTED_BUFFER_MANAGEMENT_VERSION_HIDL_DEVICE_3_5);
        }

        mInterface = new HalInterface(session, queue, mUseHalBufManager);
        std::string providerType;
        mVendorTagId = manager->getProviderTagIdLocked(mId.string());
        mTagMonitor.initialize(mVendorTagId);
        if (!monitorTags.isEmpty()) {
            mTagMonitor.parseTagsToMonitor(String8(monitorTags));
        }

        // Metadata tags needs fixup for monochrome camera device version less
        // than 3.5.
        hardware::hidl_version maxVersion{0,0};
        res = manager->getHighestSupportedVersion(mId.string(), &maxVersion);
        if (res != OK) {
            ALOGE("%s: Error in getting camera device version id: %s (%d)",
                    __FUNCTION__, strerror(-res), res);
            return res;
        }
        int deviceVersion = HARDWARE_DEVICE_API_VERSION(
                maxVersion.get_major(), maxVersion.get_minor());

        bool isMonochrome = false;
        camera_metadata_entry_t entry = mDeviceInfo.find(ANDROID_REQUEST_AVAILABLE_CAPABILITIES);
        for (size_t i = 0; i < entry.count; i++) {
            uint8_t capability = entry.data.u8[i];
            if (capability == ANDROID_REQUEST_AVAILABLE_CAPABILITIES_MONOCHROME) {
                isMonochrome = true;
            }
        }
        mNeedFixupMonochromeTags = (isMonochrome && deviceVersion < CAMERA_DEVICE_API_VERSION_3_5);

        return initializeCommonLocked();
    }

    status_t Camera3Device::initializeCommonLocked() {

        /** Start up status tracker thread */
        mStatusTracker = new StatusTracker(this);
        status_t res = mStatusTracker->run(String8::format("C3Dev-%s-Status", mId.string()).string());
        if (res != OK) {
            SET_ERR_L("Unable to start status tracking thread: %s (%d)",
                    strerror(-res), res);
            mInterface->close();
            mStatusTracker.clear();
            return res;
        }

        /** Register in-flight map to the status tracker */
        mInFlightStatusId = mStatusTracker->addComponent();

        if (mUseHalBufManager) {
            res = mRequestBufferSM.initialize(mStatusTracker);
            if (res != OK) {
                SET_ERR_L("Unable to start request buffer state machine: %s (%d)",
                        strerror(-res), res);
                mInterface->close();
                mStatusTracker.clear();
                return res;
            }
        }

        /** Create buffer manager */
        mBufferManager = new Camera3BufferManager();

        Vector<int32_t> sessionParamKeys;
        camera_metadata_entry_t sessionKeysEntry = mDeviceInfo.find(
                ANDROID_REQUEST_AVAILABLE_SESSION_KEYS);
        if (sessionKeysEntry.count > 0) {
            sessionParamKeys.insertArrayAt(sessionKeysEntry.data.i32, 0, sessionKeysEntry.count);
        }

        /** Start up request queue thread */
        mRequestThread = new RequestThread(
                this, mStatusTracker, mInterface, sessionParamKeys, mUseHalBufManager);
        res = mRequestThread->run(String8::format("C3Dev-%s-ReqQueue", mId.string()).string());
        if (res != OK) {
            SET_ERR_L("Unable to start request queue thread: %s (%d)",
                    strerror(-res), res);
            mInterface->close();
            mRequestThread.clear();
            return res;
        }

        mPreparerThread = new PreparerThread();

        internalUpdateStatusLocked(STATUS_UNCONFIGURED);
        mNextStreamId = 0;
        mDummyStreamId = NO_STREAM;
        mNeedConfig = true;
        mPauseStateNotify = false;

        // Measure the clock domain offset between camera and video/hw_composer
        camera_metadata_entry timestampSource =
                mDeviceInfo.find(ANDROID_SENSOR_INFO_TIMESTAMP_SOURCE);
        if (timestampSource.count > 0 && timestampSource.data.u8[0] ==
                ANDROID_SENSOR_INFO_TIMESTAMP_SOURCE_REALTIME) {
            mTimestampOffset = getMonoToBoottimeOffset();
        }

        // Will the HAL be sending in early partial result metadata?
        camera_metadata_entry partialResultsCount =
                mDeviceInfo.find(ANDROID_REQUEST_PARTIAL_RESULT_COUNT);
        if (partialResultsCount.count > 0) {
            mNumPartialResults = partialResultsCount.data.i32[0];
            mUsePartialResult = (mNumPartialResults > 1);
        }

        camera_metadata_entry configs =
                mDeviceInfo.find(ANDROID_SCALER_AVAILABLE_STREAM_CONFIGURATIONS);
        for (uint32_t i = 0; i < configs.count; i += 4) {
            if (configs.data.i32[i] == HAL_PIXEL_FORMAT_IMPLEMENTATION_DEFINED &&
                    configs.data.i32[i + 3] ==
                    ANDROID_SCALER_AVAILABLE_STREAM_CONFIGURATIONS_INPUT) {
                mSupportedOpaqueInputSizes.add(Size(configs.data.i32[i + 1],
                        configs.data.i32[i + 2]));
            }
        }

        if (DistortionMapper::isDistortionSupported(mDeviceInfo)) {
            res = mDistortionMappers[mId.c_str()].setupStaticInfo(mDeviceInfo);
            if (res != OK) {
                SET_ERR_L("Unable to read necessary calibration fields for distortion correction");
                return res;
            }
        }
        notifyCameraState(true);
        return OK;
    }

CameraProviderManager::openSession

    status_t CameraProviderManager::openSession(const std::string &id,
            const sp<device::V3_2::ICameraDeviceCallback>& callback,
            /*out*/
            sp<device::V3_2::ICameraDeviceSession> *session) {

        std::lock_guard<std::mutex> lock(mInterfaceMutex);

        auto deviceInfo = findDeviceInfoLocked(id,
                /*minVersion*/ {3,0}, /*maxVersion*/ {4,0});
        if (deviceInfo == nullptr) return NAME_NOT_FOUND;

        auto *deviceInfo3 = static_cast<ProviderInfo::DeviceInfo3*>(deviceInfo);
        const sp<provider::V2_4::ICameraProvider> provider =
                deviceInfo->mParentProvider->startProviderInterface();
        if (provider == nullptr) {
            return DEAD_OBJECT;
        }
        saveRef(DeviceMode::CAMERA, id, provider);

        Status status;
        hardware::Return<void> ret;
        auto interface = deviceInfo3->startDeviceInterface<
                CameraProviderManager::ProviderInfo::DeviceInfo3::InterfaceT>();
        if (interface == nullptr) {
            return DEAD_OBJECT;
        }

        ret = interface->open(callback, [&status, &session]
                (Status s, const sp<device::V3_2::ICameraDeviceSession>& cameraSession) {
                    status = s;
                    if (status == Status::OK) {
                        *session = cameraSession;
                    }
                });
        if (!ret.isOk()) {
            removeRef(DeviceMode::CAMERA, id);
            ALOGE("%s: Transaction error opening a session for camera device %s: %s",
                    __FUNCTION__, id.c_str(), ret.description().c_str());
            return DEAD_OBJECT;
        }
        return mapToStatusT(status);
    }

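3.2.2.5 Summary

Opening a camera device is implemented mainly by connectDevice.

When the Camera Framework calls ICameraService's connectDevice interface, two main things happen:

- A CameraDeviceClient is created.

- The CameraDeviceClient is initialized and returned to the Framework.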

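The CameraDeviceClient is created by the makeClient method. It first instantiates a CameraDeviceClient and stores in it CameraDeviceImpl.CameraDeviceCallbacks, the Framework's implementation of ICameraDeviceCallbacks, so that any result produced later can be sent back to the Framework through this callback. It also instantiates a Camera3Device object.

The CameraDeviceClient is initialized by calling its initialize method, in which:

- First, the initialize method of the parent class Camera2ClientBase is called.

- Next, a FrameProcessorBase object is instantiated and given the internal Camera3Device object, which links FrameProcessorBase to Camera3Device. Its internal thread is then started and waits for results from Camera3Device.

- Finally, the CameraDeviceClient is registered with FrameProcessorBase, which links FrameProcessorBase to the CameraDeviceClient.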

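Camera2ClientBase's initialize method calls Camera3Device's initialize method to initialize it, and then calls Camera3Device's setNotifyCallback method to register itself with Camera3Device. From then on, any result that Camera3Device produces can be delivered to the CameraDeviceClient.

During Camera3Device initialization, CameraProviderManager's openSession method is first called to open and obtain an ICameraDeviceSession proxy from the Provider. Next, a HalInterface object is instantiated and the ICameraDeviceSession proxy is stored in it. Finally, the RequestThread is started and waits for requests to be submitted.

As for CameraProviderManager's openSession method, it uses the ICameraDevice proxy held by the internal DeviceInfo and calls its open method to open and obtain a remote ICameraDeviceSession proxy from Camera Provider. Because Camera3Device implements the Provider's ICameraDeviceCallback interface, it is passed into the Provider through open and receives the results that the Provider sends back.

At this point connectDevice has finished and the app holds a camera device. To capture images, the app next calls CameraDevice's createCaptureSession, which reaches the Framework. The Framework then performs a series of operations through the ICameraDeviceUser proxy, namely cancelRequest, beginConfigure, deleteStream, createStream and endConfigure, to configure the data streams.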
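The callback wiring set up during connectDevice can be sketched in a few lines. This is a simplified, single-process model and not the AOSP implementation: `DeviceSketch` stands in for Camera3Device, `ClientSketch` for CameraDeviceClient, and `FrameworkCallbackSketch` for the Framework's callback proxy. All of these names, and the `emitResult` helper that simulates a result arriving from the Provider, are hypothetical.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <string>
#include <utility>

// What the device calls when it has something to report.
struct NotificationListenerSketch {
    virtual ~NotificationListenerSketch() = default;
    virtual void notifyResult(const std::string& result) = 0;
};

// Stand-in for Camera3Device.
class DeviceSketch {
public:
    bool initialize() { mSessionOpen = true; return true; }  // openSession stand-in
    void setNotifyCallback(std::weak_ptr<NotificationListenerSketch> listener) {
        mListener = std::move(listener);
    }
    // Simulates a result arriving from the provider side.
    void emitResult(const std::string& result) {
        if (!mSessionOpen) return;
        if (auto listener = mListener.lock()) listener->notifyResult(result);
    }

private:
    bool mSessionOpen = false;
    std::weak_ptr<NotificationListenerSketch> mListener;
};

using FrameworkCallbackSketch = std::function<void(const std::string&)>;

// Stand-in for CameraDeviceClient: owns the device, keeps the framework callback.
class ClientSketch : public NotificationListenerSketch,
                     public std::enable_shared_from_this<ClientSketch> {
public:
    explicit ClientSketch(FrameworkCallbackSketch callback)
        : mFrameworkCallback(std::move(callback)),
          mDevice(std::make_shared<DeviceSketch>()) {}

    bool initialize() {
        if (!mDevice->initialize()) return false;
        mDevice->setNotifyCallback(shared_from_this());  // register with the device
        return true;
    }
    void notifyResult(const std::string& result) override {
        if (mFrameworkCallback) mFrameworkCallback(result);  // forward upward
    }
    DeviceSketch& device() { return *mDevice; }

private:
    FrameworkCallbackSketch mFrameworkCallback;
    std::shared_ptr<DeviceSketch> mDevice;
};

// Stand-in for connectDevice: create the client, initialize it, return it.
std::shared_ptr<ClientSketch> connectDeviceSketch(FrameworkCallbackSketch callback) {
    auto client = std::make_shared<ClientSketch>(std::move(callback));
    if (!client->initialize()) return nullptr;
    return client;
}
```

The device holds only a weak reference to its listener, so a result that arrives after the client has gone away is silently dropped instead of keeping the client alive.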

3.2.3 Configuring Streams

3.2.3.1 cancelRequest

[->frameworks\av\services\camera\libcameraservice\CameraDeviceClient.cpp]

    binder::Status CameraDeviceClient::cancelRequest(

3.2.3.2 beginConfigure

    binder::Status CameraDeviceClient::beginConfigure() {

3.2.3.3 deleteStream

    binder::Status CameraDeviceClient::deleteStream(int streamId) {

3.2.3.4 createStream

    binder::Status CameraDeviceClient::createStream(

3.2.3.5 endConfigure

    binder::Status CameraDeviceClient::endConfigure(int operatingMode,

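3.2.3.6 Summary

cancelRequest is simple. It maps to CameraDeviceClient's cancelRequest method, which tells Camera3Device to clear the request queue in RequestThread and stop submitting further requests.

beginConfigure is an empty implementation.

deleteStream and createStream delete the previous streams and create streams for the new operation, respectively.

endConfigure comes last in the call sequence. It maps to CameraDeviceClient's endConfigure method, whose logic is simple: it calls Camera3Device's configureStreams method, which in turn uses ICameraDeviceSession's configureStreams_3_4 method to pass the configuration to the Provider.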
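One way to picture the configure sequence is as a small batch of edits to a stream set that is pushed to the HAL in a single call at the end. The sketch below models that reading only: `StreamConfigSketch` and `StreamSketch` are hypothetical names, and `endConfigure` merely records which stream ids would be sent, where the real code calls configureStreams.

```cpp
#include <cassert>
#include <map>
#include <vector>

struct StreamSketch {
    int width;
    int height;
};

class StreamConfigSketch {
public:
    // createStream: register a stream locally and mark the set as dirty.
    int createStream(int width, int height) {
        int id = mNextStreamId++;
        mStreams[id] = StreamSketch{width, height};
        mNeedConfig = true;
        return id;
    }

    // deleteStream: drop a previously created stream.
    bool deleteStream(int id) {
        if (mStreams.erase(id) == 0) return false;
        mNeedConfig = true;
        return true;
    }

    // endConfigure: hand the complete stream set over in one call.
    bool endConfigure() {
        if (mStreams.empty()) return false;
        mConfigured.clear();
        for (const auto& entry : mStreams) mConfigured.push_back(entry.first);
        mNeedConfig = false;
        return true;
    }

    bool needsConfig() const { return mNeedConfig; }
    const std::vector<int>& configuredIds() const { return mConfigured; }

private:
    int mNextStreamId = 0;
    bool mNeedConfig = true;
    std::map<int, StreamSketch> mStreams;
    std::vector<int> mConfigured;
};
```

For example, creating a 1920x1080 stream and a 640x480 stream, deleting the second, and then calling `endConfigure()` leaves only the first stream id in `configuredIds()`.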

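At this point the streams are configured and the app has obtained the Framework's CameraCaptureSession object, so it can start submitting image requests. Before submitting, it must create a request. The app does this by calling createCaptureRequest in CameraDeviceImpl, which is implemented in the Framework and internally calls Camera Service's AIDL interface createDefaultRequest. That interface is implemented by CameraDeviceClient, which calls Camera3Device's createDefaultRequest method, and the call finally goes through the ICameraDeviceSession proxy's constructDefaultRequestSettings method to the Provider, which creates a default request configuration. When that completes, the Provider returns the configuration to the Service, which passes it on to the app.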

3.2.4 Handling Image Requests

3.2.4.1 createDefaultRequest

    // Create a request object from a template.

3.2.4.2 createDefaultRequest

    status_t Camera3Device::createDefaultRequest(int templateId,

3.2.4.3 submitRequestList

    binder::Status CameraDeviceClient::submitRequestList(

3.2.4.4 setStreamingRequestList

    status_t Camera3Device::setStreamingRequestList(

3.2.4.5 sendRequestsBatch

    bool Camera3Device::RequestThread::sendRequestsBatch() {

3.2.4.6 processBatchCaptureRequests

    status_t Camera3Device::HalInterface::processBatchCaptureRequests(

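3.2.4.7 Summary

Once a request has been created, image capture requests can be submitted. There are roughly two flows, preview and still capture. They differ mainly in the priority with which Camera Service picks up their requests: a still-capture request generally has higher priority than a preview request. Concretely, if a still-capture request arrives while preview requests are being submitted continuously, the RequestThread in Camera3Device submits the still-capture request first. Here we trace the overall flow of submitting a still-capture request:

Submitting a still-capture request to Camera Service is handled mainly by CameraDeviceClient's submitRequestList method, which hands the request to Camera3Device (the listing above shows setStreamingRequestList, the entry point for repeating requests; in AOSP, one-shot captures go through captureList instead). Camera3Device adds the request to the RequestQueue in RequestThread and wakes the thread. Once awake, the thread takes the request from the RequestQueue and sends it to the Provider through the processCaptureRequest_3_4 method of the ICameraDeviceSession proxy obtained earlier. Because Google requires processCaptureRequest_3_4 to be non-blocking, the call returns as soon as the request has been sent, and the app then waits for the result to come back.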
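The priority rule described above (queued one-shot captures go out before the repeating preview request) can be modelled without any threading. The sketch below is an assumption-laden simplification of what RequestThread does: `RequestQueueSketch` and its method names are hypothetical, requests are plain strings, and the wake-up and wait logic of the real thread is left out.

```cpp
#include <cassert>
#include <deque>
#include <optional>
#include <string>
#include <utility>
#include <vector>

class RequestQueueSketch {
public:
    // Preview: a list of requests that is re-issued whenever nothing else is queued.
    void setRepeating(std::vector<std::string> requests) {
        mRepeating = std::move(requests);
    }

    // Still capture: a one-shot request placed in the regular queue.
    void queueCapture(std::string request) {
        mQueue.push_back(std::move(request));
    }

    // Picks the next request to send: queued captures first, and only when
    // the queue is empty is it refilled from the repeating list.
    std::optional<std::string> next() {
        if (mQueue.empty()) {
            if (mRepeating.empty()) return std::nullopt;  // nothing to send
            mQueue.insert(mQueue.end(), mRepeating.begin(), mRepeating.end());
        }
        std::string request = std::move(mQueue.front());
        mQueue.pop_front();
        return request;
    }

private:
    std::deque<std::string> mQueue;
    std::vector<std::string> mRepeating;
};
```

With a repeating "preview" request set, calling `queueCapture("still")` makes the following `next()` return the still request, after which `next()` falls back to the preview request again.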

3.2.5 Receiving Image Results

Results are retrieved asynchronously and fall into two parts: events and data. The data is further divided by flow into metadata and image data. Let's go through them in detail.

After a request is submitted, the first thing to arrive from the Provider is the shutter notify.

3.2.5.1 notify

    void Camera3Device::notify(const camera3_notify_msg *msg) {

3.2.5.2 Camera3Device::notifyShutter

    void Camera3Device::notifyShutter(const camera3_shutter_msg_t &msg,

3.2.5.3 CameraDeviceClient::notifyShutter

    void CameraDeviceClient::notifyShutter(const CaptureResultExtras& resultExtras,

3.2.5.4 sendCaptureResult

    void Camera3Device::sendCaptureResult(CameraMetadata &pendingMetadata,

3.2.5.5 insertResultLocked

    void Camera3Device::insertResultLocked(CaptureResult *result,

3.2.5.6 processNewFrames

    bool FrameProcessorBase::threadLoop() {

3.2.5.7 processSingleFrame

    bool FrameProcessorBase::processSingleFrame(CaptureResult &result,

3.2.5.8 returnOutputBuffers

    void Camera3Device::returnOutputBuffers(

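3.2.5.9 Summary

After a request is submitted, the first thing to arrive from the Provider is the shutter notify. Because Camera3Device was earlier passed into the Provider as the ICameraDeviceCallback implementation, the Provider calls Camera3Device's notify method to deliver the event to Camera Service. After several layers of calls, the event reaches CameraDeviceClient through its notifyShutter method, and is then passed to the Framework through the onCaptureStarted method of the Framework's CameraDeviceCallbacks interface that was supplied when the camera device was opened, and from there to the app.

After the shutter event has been reported, as soon as metadata is produced, Camera Provider delivers it to Camera Service through ICameraDeviceCallback's processCaptureResult_3_4 method, which is implemented by Camera3Device's processCaptureResult_3_4. After several layers of calls, that method calls sendCaptureResult to put the result into mResultQueue and notifies the FrameProcessorBase thread, which takes the result out and sends it to CameraDeviceClient. The result is then uploaded to the Framework through the onResultReceived method of the internal CameraDeviceCallbacks remote proxy, and from there to the app for processing.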
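The metadata path (insert into a result queue, then let the frame processor drain it and forward each entry) can be sketched as below. This is a single-threaded approximation with hypothetical names (`FrameProcessorSketch`, `ResultSketch`). The rule that an entry counts as partial while its index is below the partial-result count reflects my understanding of how the count read from ANDROID_REQUEST_PARTIAL_RESULT_COUNT is used, and should be treated as an assumption.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <utility>

struct ResultSketch {
    long frameNumber;
    int partialResultCount;  // 1-based index of this piece of metadata
};

class FrameProcessorSketch {
public:
    using Listener = std::function<void(const ResultSketch&, bool isPartial)>;

    FrameProcessorSketch(int numPartialResults, Listener listener)
        : mNumPartialResults(numPartialResults), mListener(std::move(listener)) {}

    // Device side: queue a result for later delivery.
    void insertResult(const ResultSketch& result) { mResultQueue.push_back(result); }

    // Processor side: drain everything queued so far and forward it.
    // Returns the number of results that were taken off the queue.
    int processNewFrames() {
        int forwarded = 0;
        while (!mResultQueue.empty()) {
            ResultSketch result = mResultQueue.front();
            mResultQueue.pop_front();
            bool isPartial = result.partialResultCount < mNumPartialResults;
            if (mListener) mListener(result, isPartial);
            ++forwarded;
        }
        return forwarded;
    }

private:
    int mNumPartialResults;
    Listener mListener;
    std::deque<ResultSketch> mResultQueue;
};
```

In the real service the drain loop runs on its own thread and is woken when a result is inserted; here the caller simply invokes `processNewFrames()` after inserting results.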

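Image data initially follows a similar path into Camera3Device, but is then handed to Camera3OutputStream through returnOutputBuffers. BufferQueue follows a producer-consumer model, and the stream uses the producer's queue method to notify the consumer that the buffer is ready to be consumed. The consumer is an app-side class that owns a Surface, such as ImageReader, so the app can finally take the image data out for post-processing.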
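The producer-consumer hand-off can be illustrated with a toy buffer queue. The four operation names mirror the usual BufferQueue vocabulary (dequeue, queue, acquire, release), but `BufferQueueSketch` itself is a hypothetical, single-threaded simplification: slots are plain integers and the "frame available" notification is an ordinary callback.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <optional>
#include <utility>

class BufferQueueSketch {
public:
    BufferQueueSketch(int slotCount, std::function<void()> onFrameAvailable)
        : mOnFrameAvailable(std::move(onFrameAvailable)) {
        for (int slot = 0; slot < slotCount; ++slot) mFree.push_back(slot);
    }

    // Producer: take a free slot to fill (empty when every slot is in use).
    std::optional<int> dequeueBuffer() { return popFront(mFree); }

    // Producer: hand a filled slot over and notify the consumer.
    void queueBuffer(int slot) {
        mQueued.push_back(slot);
        if (mOnFrameAvailable) mOnFrameAvailable();
    }

    // Consumer: take the oldest filled slot.
    std::optional<int> acquireBuffer() { return popFront(mQueued); }

    // Consumer: give the slot back so the producer can reuse it.
    void releaseBuffer(int slot) { mFree.push_back(slot); }

private:
    static std::optional<int> popFront(std::deque<int>& queue) {
        if (queue.empty()) return std::nullopt;
        int slot = queue.front();
        queue.pop_front();
        return slot;
    }

    std::deque<int> mFree;
    std::deque<int> mQueued;
    std::function<void()> mOnFrameAvailable;
};
```

In this picture the output stream plays the producer (dequeue, fill, queue) and an ImageReader-like consumer reacts to the callback with acquire and, once done with the image, release.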

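4. Summary

Camera Service has existed since the first generation of the Android camera framework. It stays loosely coupled to the Camera Framework above it and accepts its image requests, and it originally wrapped the Camera HAL Module, controlling it through the HAL interface. From the start, then, Google followed a layered design in which the hardware abstraction layer was pulled out and managed inside the Service. The benefit is clear: the HAL implemented by the platform vendor is decoupled from the system layer and can be controlled independently. Later, Google moved vendor implementations into the vendor partition, fully isolating the system from the platform vendor at the partition level. Google then took the opportunity to decouple the Camera HAL Module from Camera Service and move it into Camera Provider, a separate process under the vendor partition. Since then, Camera Service's job has been to accept requests from the Camera Framework and forward them to Camera Provider, acting as a relay station in the system.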